Why We Do It

Annual program assessment is intended to promote regular engagement in evidence-based program planning in support of the faculty's goals for student learning and success. Ongoing attention to student achievement and related learning needs is particularly important for a new and growing institution. It is also essential to realizing and documenting our goals for excellence in undergraduate and graduate education.

At the undergraduate level, evidence of student learning and the student experience has supported program-level decision making in numerous ways over the last several years. Program faculty have responded to assessment findings by adjusting prerequisites, re-sequencing program curriculum, refocusing assignments, adopting new pedagogies and developing new courses. Perhaps most importantly, annual assessment has promoted faculty conversations about student learning and teaching, fostering a more cohesive vision of our programs' educational intentions.

At the graduate level, program-level assessment continues to grow in concert with the expansion of our graduate offerings.

How We Do It

The exact methods for assessing student learning are determined by the program's faculty, in keeping with the faculty's ownership of the curriculum. The overarching goals for programs, however, are the same. These include:

- gathering evidence that yields actionable insights into student learning achievement and the student experience in relation to the expected program learning outcomes. The most effective strategies involve complementary lines of direct and indirect evidence designed to represent the cumulative impact of the program's curriculum on student learning and success at a given point in the degree.

- using broadly shared, programmatic criteria and standards to evaluate the evidence. Usually elaborated in the form of a rubric, the criteria and standards describe what students are able to do at the time of graduation if they have achieved a given program learning outcome.

- discussing results as a faculty and with other stakeholders as appropriate, including graduate student instructors in the program.

- identifying and implementing actions to improve student learning and/or the student experience, as appropriate, recognizing that making no change is also a valid outcome.

More specific descriptions of expectations for effective assessment practices and use of results are detailed in this rubric. Our goal is for all programs to consistently practice assessment at the "developed" level. We are moving toward that goal, recognizing that each program learning outcome poses its own assessment challenges.

To date, programs have used a rich variety of evidence. Examples of direct evidence for assessing undergraduate majors and minors include senior theses, embedded exam questions, portfolios (electronic and paper), papers and reports, and ETS subject tests.

Examples of indirect evidence include reflective writing within portfolios, program-specific surveys, embedded questions on the graduating senior survey, data from institutional surveys, careful review of syllabi, curriculum and curriculum maps, graduate student instructor (GSI) interviews, and student interviews conducted by the Students Assessing Teaching and Learning Program (SATAL).

These last two sources of indirect evidence have proven particularly helpful. GSI interviews provide insights into program curriculum alignment, student learning achievements based on both classroom and grading experiences, and the kinds of instructional activities and pedagogies employed by graduate students in support of course and program learning outcomes. SATAL is a free service available to programs.

The faculty of each program assess student achievement of at least one program learning outcome (PLO) annually. This work is guided by the program's faculty assessment organizer (FAO), with support from the school-based assessment coordinator. Methods, findings, actions and related resource implications are summarized in a formal PLO Report.

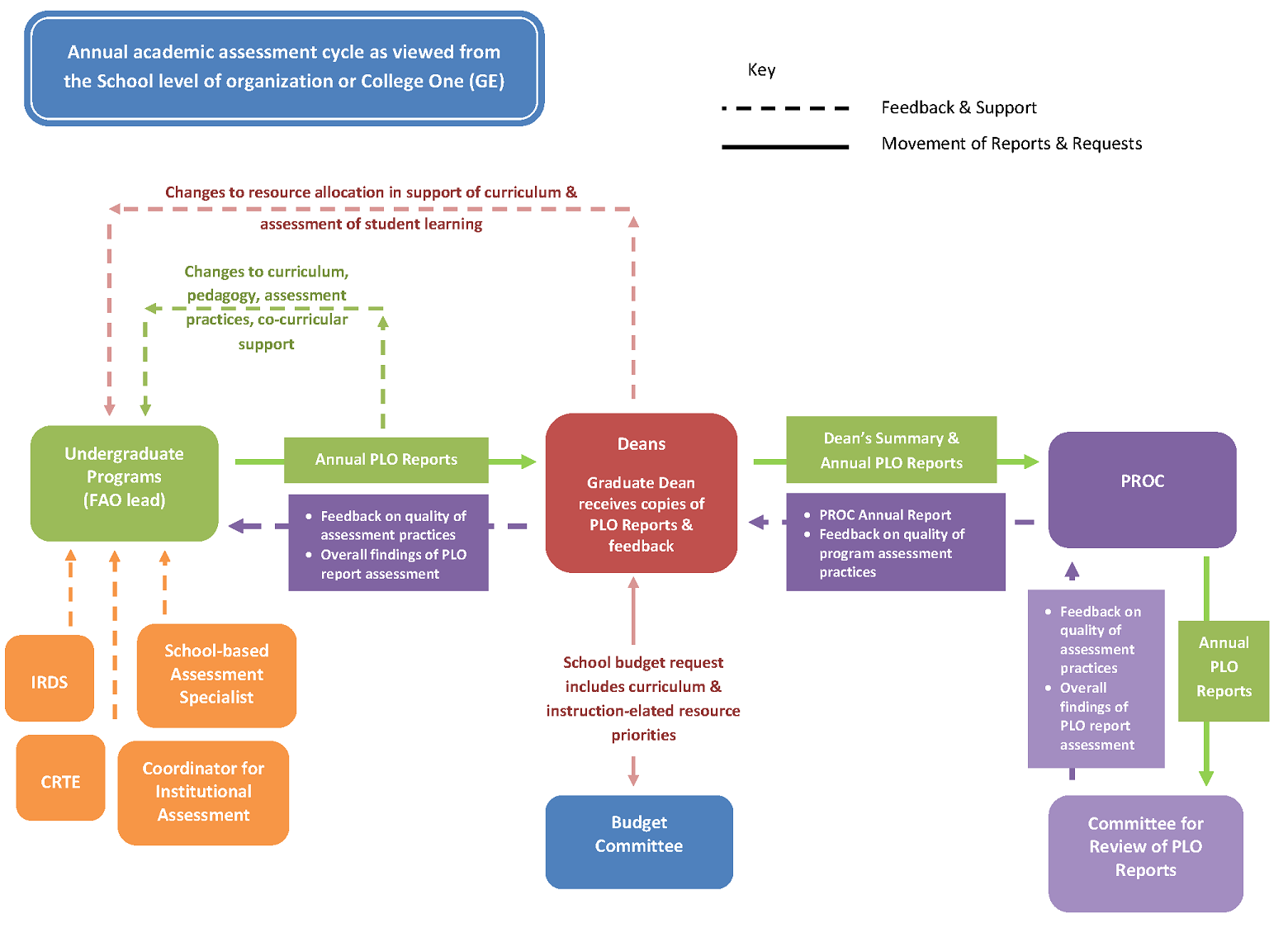

As depicted in the diagram above, each program’s report is:

- reviewed by the dean for resource implications. The dean’s summary, including resource priorities, is forwarded to PROC with the reports.

- reviewed by the Committee for the Review of PLO Reports. The committee uses a structured, rubric-based process to provide each program with constructive feedback on its assessment methods.


To identify and address trends in assessment practice and student learning, the committee's feedback and rubric scores are aggregated. A summary report, including recommended action items, is provided to PROC, shared with school deans, program faculty and the Campus Working Group on Assessment, and made available to the UC Merced campus community.

When We Do It

Every academic program summarizes its assessment activities annually using a report template. Reports are submitted to the dean on Oct. 1 or March 1, depending on program preference.